By Emmanuel Benhamou, Managing Director at EAIGG, and Ask Tutika, Head of Operations at EAIGG

In enterprise AI, the hardest work often begins after the first proof-of-concept succeeds. Procurement, compliance, and integration can stretch for months or even years, testing the patience of committed champions on both sides. Without a deployment model designed for AI’s iterative and adaptive nature, momentum often dissipates before the technology delivers its promised value. Winning the deployment game requires moving beyond transactional procurement toward a model of collaborative, ethical co-innovation that embeds trust, clarity, and adaptability from the outset.

Building Trust and Ethical Alignment

Trust is the foundation of any successful AI engagement, and it must be established from the very beginning. Enterprises now expect verifiable commitments around fairness, transparency, explainability, and rigorous compliance — not afterthought assurances. For startups, this means arriving prepared with privacy impact assessments, algorithmic audits, bias detection methodologies, and robust data governance frameworks as core elements of the offer.

Interpretability is especially critical. Opaque, “black box” systems fuel resistance and regulatory delays, particularly in high-stakes sectors. Models should be explainable by design, enabling users and auditors alike to understand, validate, and, when necessary, challenge outputs. Strong data governance is equally important. Many enterprises are hampered by siloed data architectures and rigid governance processes; collaborative redesigns that preserve privacy while enabling secure access can unlock entirely new opportunities for AI integration.

The payoff is clear in the insurance sector. When Earnix acquired Zelros, it gained more than a recommendation engine — it acquired a platform in which explainability, fairness, and regulatory compliance were embedded from the start. By surfacing the rationale behind underwriting decisions in ways that satisfied customers and regulators, Zelros accelerated deployment timelines and deepened trust. Here, ethics was not a box-ticking exercise; it was a source of competitive advantage.

Trust also grows when AI is positioned as an augmentative force for human capability rather than a replacement for it. Quantifying this effect through the Human-AI Augmentation Index (HAI) or similar frameworks turns abstract values into measurable outcomes. Demonstrating tangible gains in productivity, decision quality, and innovation capacity reinforces the view of AI as a co-pilot — one that empowers employees to move from manual execution to orchestrating intelligent workflows.

Aligning on Value and Securing Sponsorship

Even with trust in place, misaligned expectations can derail adoption. Ambiguous success metrics leave projects vulnerable to shifting interpretations of progress. From the outset, enterprises and startups must agree on measurable KPIs that address both business and technical objectives. This early alignment creates joint accountability and ensures that performance is evaluated against criteria everyone accepts.

Clear ROI pathways are equally critical. In the absence of a strong financial and strategic case, even technically sound initiatives can lose internal momentum. ROI should be articulated in terms that resonate across stakeholder groups — procurement, compliance, business units, and the C-suite — linking early wins to long-term competitive advantage.

High-level sponsorship makes these agreements durable. Executive champions provide the “air cover” that shields internal innovation teams from shifting priorities and political resistance. Effective sponsorship is not passive endorsement; it requires structured engagement through regular briefings, visible advocacy, and consistent alignment with enterprise strategy. Without it, projects can be quietly deprioritized despite early success.

Bridging Procurement and Execution

Procurement teams are wired to reduce risk; innovation teams are wired to pursue it. In AI deployment, this tension can stall progress unless both sides adopt new ways of working together. Rigid, fixed-scope contracts rarely fit AI’s evolving nature. Adaptive contracting, phased rollouts, and iterative milestones allow the solution to mature while maintaining oversight.

Proof-of-concepts must be designed as stepping stones to production, not isolated experiments. That means defining clear objectives, realistic timelines, and precise success criteria from the start. Regular evaluation points should focus on scalability potential, not just short-term performance. Without this discipline, organizations risk “innovation theater” — a cycle of pilots that generate attention but never operational value.
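One way to make "precise success criteria" and scalability-focused evaluation points concrete is to write them down as a checkable gate. The following is a minimal sketch, not a prescribed method: the criterion names, targets, and the `gate_decision` helper are all hypothetical and would be agreed jointly by the enterprise and the startup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One jointly agreed success criterion (names and targets are hypothetical)."""
    name: str
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


def gate_decision(
    criteria: list[Criterion], observed: dict[str, float]
) -> tuple[str, list[str]]:
    """Return ("advance" | "hold", unmet criterion names).

    A criterion with no observed value counts as unmet, so a missing
    measurement can never silently pass the gate.
    """
    unmet = [
        c.name
        for c in criteria
        if c.name not in observed or not c.met(observed[c.name])
    ]
    return ("advance" if not unmet else "hold", unmet)


# Illustrative criteria mixing short-term performance and scalability.
POC_CRITERIA = [
    Criterion("task_accuracy", 0.90),
    Criterion("p95_latency_ms", 800, higher_is_better=False),
    Criterion("peak_load_rps", 50),  # scalability, not just pilot performance
]

decision, unmet = gate_decision(
    POC_CRITERIA,
    {"task_accuracy": 0.93, "p95_latency_ms": 650, "peak_load_rps": 20},
)
print(decision, unmet)
```

In this example the pilot performs well on accuracy and latency but fails the load criterion, so the gate holds it back: exactly the case where a pilot looks successful in the short term without being ready for production.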

Integrating with Precision and Iterating Continuously

Technical integration is often the steepest hill to climb. Legacy systems can resist change, and AI systems must continue to evolve after initial deployment. Startups can ease this process by providing modular integration kits, pre-built connectors, robust APIs, and clear documentation — reducing both deployment time and complexity.

Increasingly, AI-native companies are also embedding Forward Deployed Engineers (FDEs) within enterprise teams. Following a model inspired by Palantir and now employed by Scale AI and Cohere, these engineers work alongside end users to uncover edge cases and operational nuances invisible to standard requirements gathering. Insights gathered in the field are then translated into modular, reusable features that strengthen the product across the customer base.

Lyzr.ai illustrates the power of this approach. Approved for internal deployment across multiple JPMorgan Chase (JPMC) units, its onboarding agents cut corporate account opening time by 90% while improving compliance via retrieval-augmented generation, multi-LLM verification, and deterministic logic. By aligning its co-pilot model with workforce sentiment and meeting governance requirements upfront, Lyzr built internal advocacy and scaled seamlessly.

To sustain such performance, enterprises must invest in infrastructure for continuous iteration. Test environments, real-time feedback systems, and iterative deployment frameworks enable refinement without disrupting live operations — turning initial wins into long-term advantage.

Assessing Readiness and Acting on It

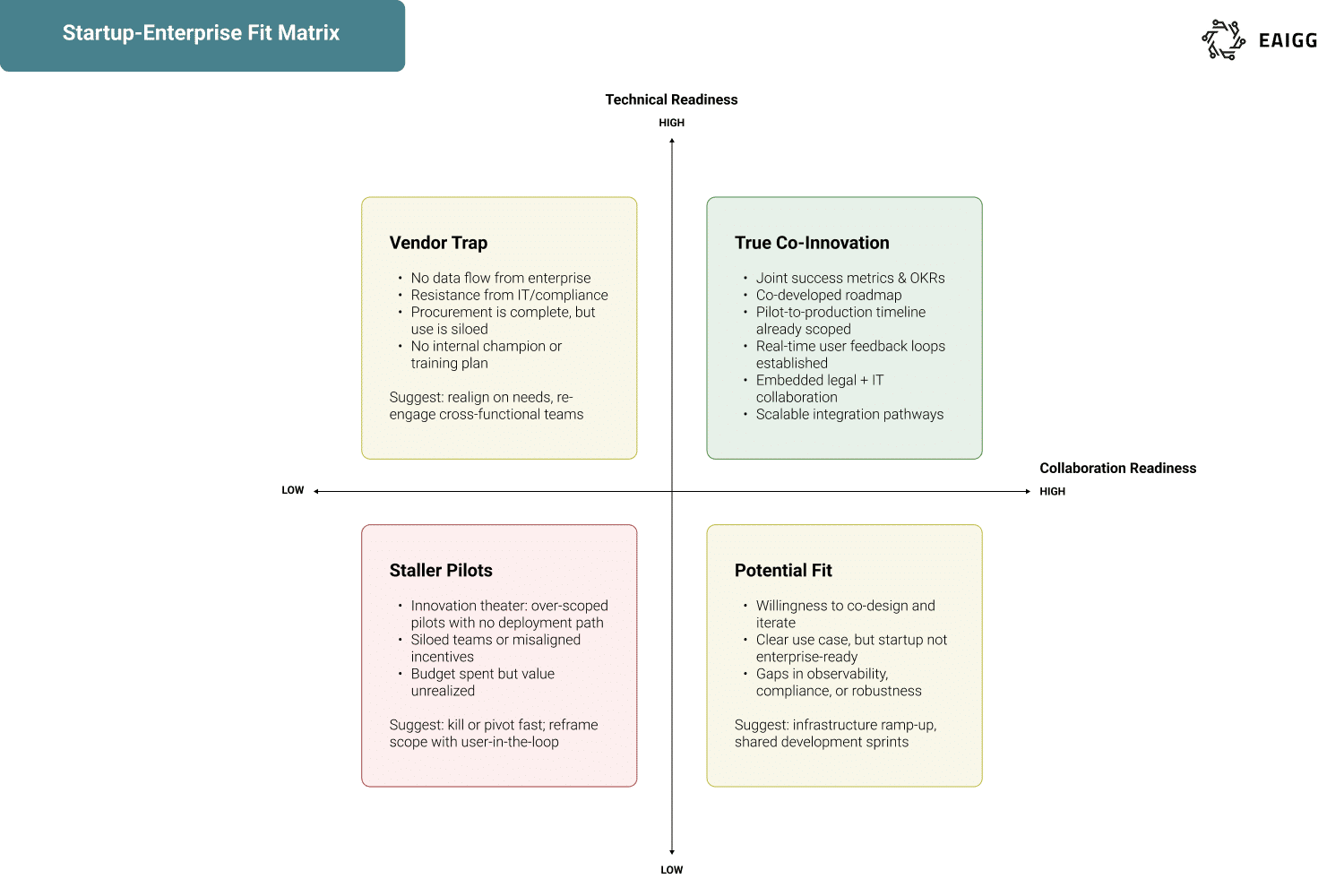

Deployment success depends on readiness on both sides. The Startup–Enterprise Ethical Fit Matrix offers a lens for this assessment:

- True Co-Innovation: Clear ethical roadmaps, joint metrics, continuous feedback, scalable deployments.

- Potential Fit: Fundamental alignment but requires additional joint investment.

- Vendor Trap: Strong technology, but blocked by enterprise misalignment or weak advocacy.

- Stalled Pilots: Unclear ownership, incoherent metrics, or no scaling plan.

On the enterprise side, readiness includes strategic alignment, process maturity, executive sponsorship strength, decision-making autonomy, and performance metrics that capture both operational results and human impact. Startups must demonstrate ethical modularity, system observability, compliance adherence, security robustness, and adaptive integration capabilities.
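The matrix and the readiness criteria above can be read as a simple two-axis classification. The sketch below is illustrative only and rests on several assumptions not stated in the article: that the two axes are enterprise readiness and startup readiness, that each criterion is scored from 0 to 1 and averaged without weights, that 0.6 is the cut-off, and that "Potential Fit" corresponds to a ready enterprise paired with a less ready startup. The function names are hypothetical.

```python
from statistics import mean

# Criterion keys mirror the readiness dimensions listed in the text.
ENTERPRISE_CRITERIA = (
    "strategic_alignment",
    "process_maturity",
    "executive_sponsorship",
    "decision_autonomy",
    "performance_metrics",
)
STARTUP_CRITERIA = (
    "ethical_modularity",
    "system_observability",
    "compliance_adherence",
    "security_robustness",
    "adaptive_integration",
)


def readiness_score(scores: dict[str, float], criteria: tuple[str, ...]) -> float:
    """Unweighted mean of 0-1 scores; raises KeyError if a criterion is missing."""
    return mean(scores[c] for c in criteria)


def fit_quadrant(enterprise: float, startup: float, threshold: float = 0.6) -> str:
    """Map two readiness scores to a quadrant (axis mapping is an assumption)."""
    enterprise_ready = enterprise >= threshold
    startup_ready = startup >= threshold
    if enterprise_ready and startup_ready:
        return "True Co-Innovation"
    if enterprise_ready:
        return "Potential Fit"   # assumed: enterprise ready, startup needs investment
    if startup_ready:
        return "Vendor Trap"     # strong technology, enterprise misaligned
    return "Stalled Pilots"


enterprise = readiness_score(
    {
        "strategic_alignment": 0.4,
        "process_maturity": 0.3,
        "executive_sponsorship": 0.2,
        "decision_autonomy": 0.5,
        "performance_metrics": 0.4,
    },
    ENTERPRISE_CRITERIA,
)
print(fit_quadrant(enterprise, startup=0.85))
```

The example scores describe a capable startup facing weak sponsorship and immature processes on the enterprise side, which lands in the "Vendor Trap" quadrant. In practice the scores, weights, and threshold would need to be agreed by both parties rather than taken from this sketch.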

For Enterprises: embed agile, ethics-driven procurement, empower innovation champions, operationalize comprehensive metrics, design ethical sandboxes, and streamline secure data access for collaborators.

For Startups: align offerings to ethical and business outcomes, co-develop PoC criteria, supply compliance documentation proactively, build modular integration toolkits, maintain regular stakeholder engagement, and adopt transparent commercial models.

Winning the deployment game is about more than passing procurement once. It is about creating the conditions for trust, shared value, and adaptability to reinforce one another over time. When enterprises and startups commit to ethics by design, align on measurable outcomes, bridge cultural divides, and integrate with both speed and governance, they move beyond pilots into sustained, scalable impact. In a market where speed, trust, and adaptability define the winners, there is no greater competitive edge.